Overview

My research focuses on generative AI security and safety, with an emphasis on scalability and efficiency. I also explore infrastructure solutions that support the seamless deployment of these algorithms at scale.

Infrastructure Support

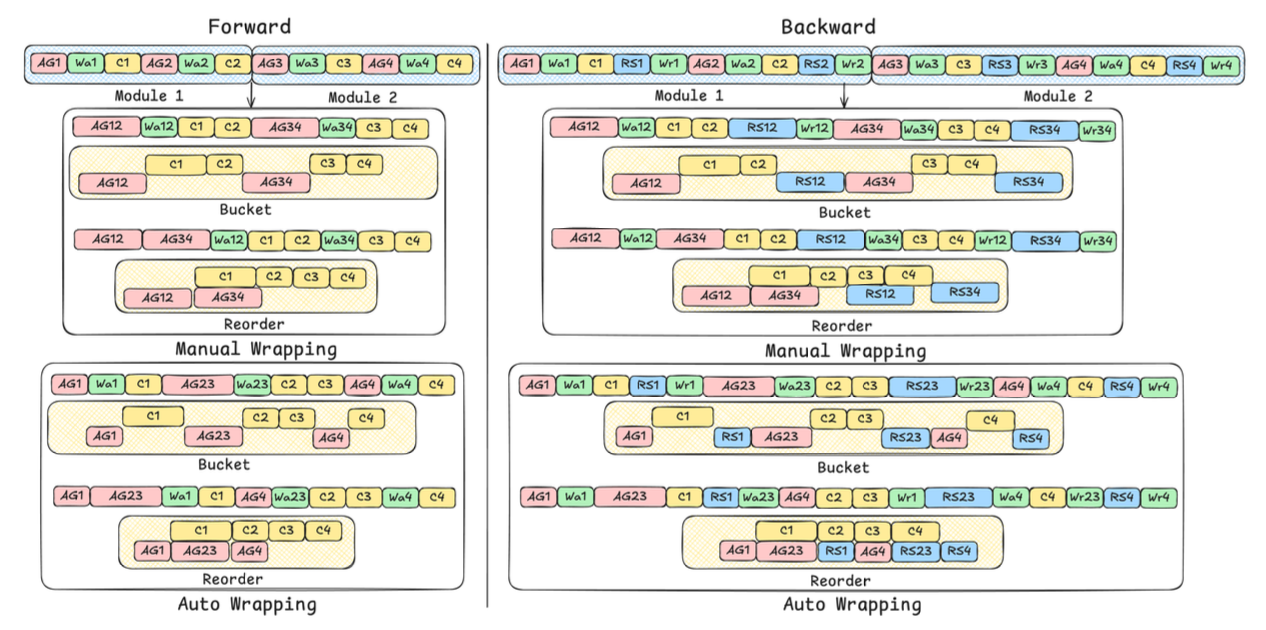

SimpleFSDP proposed a PyTorch-native, compiler-based Fully Sharded Data Parallel (FSDP) framework. Its simple implementation eases maintenance and composability, it enables full tracing of the computation-communication graph, and it improves performance through compiler backend optimizations.

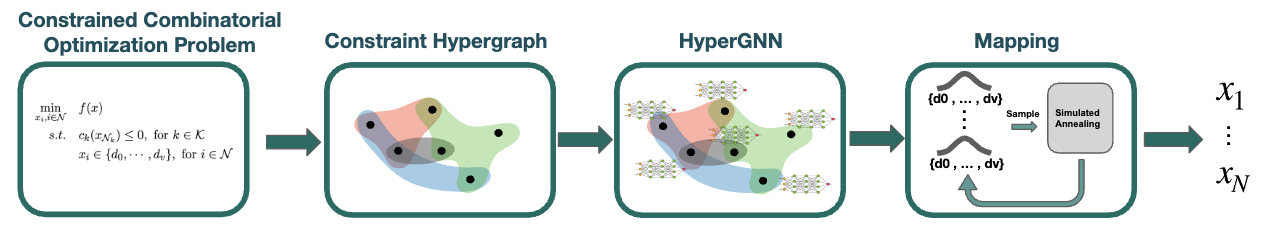

HypOP (Nature MI'24) proposed a scalable unsupervised learning-based optimization method for a wide spectrum of constrained combinatorial optimization problems with arbitrary cost functions.

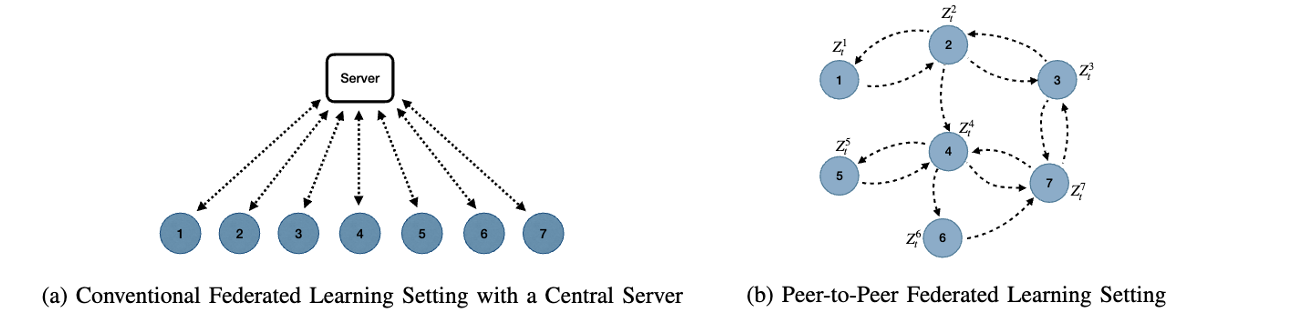

SureFED proposed a new peer-to-peer federated learning algorithm that uses uncertainty quantification to remain robust against a variety of data and model poisoning attacks.

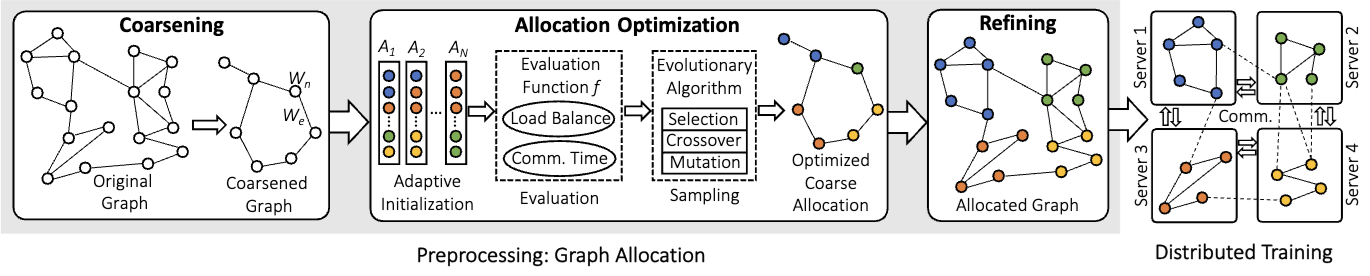

AdaGL (DAC'23) proposed an adaptive-learning-based graph allocation algorithm that minimizes communication overhead in distributed graph neural network training.

LLM Security

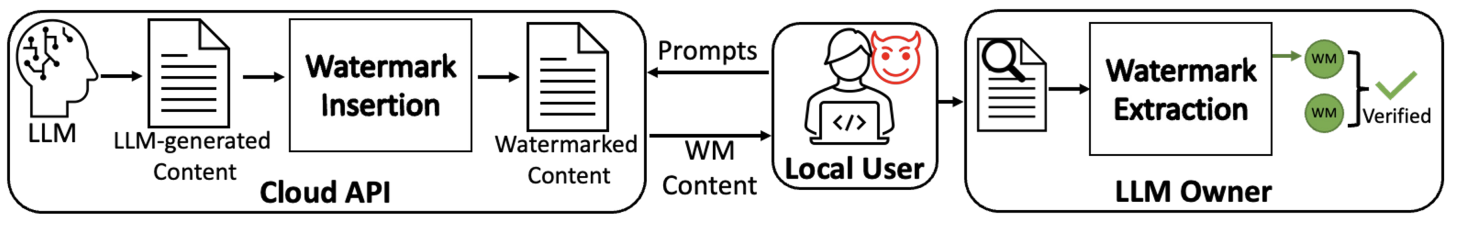

REMARK-LLM (USENIX Security'24) proposed a high-capacity, highly transferable, and robust watermarking framework for content generated by large language models.

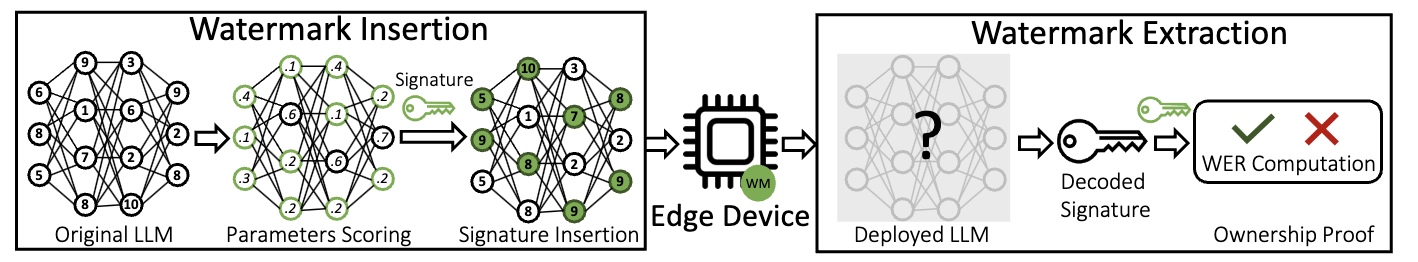

EmMark (DAC'24) proposed a novel watermarking framework for protecting the intellectual property (IP) of embedded large language models deployed on resource-constrained edge devices.

LLM Application Security

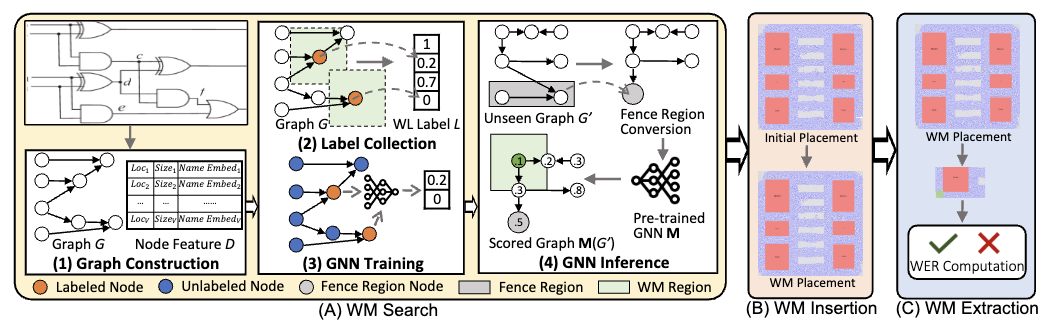

AutoMarks (MLCAD'24) proposed an automated and transferable watermarking framework that leverages graph neural networks to reduce watermark search overhead during the placement stage.

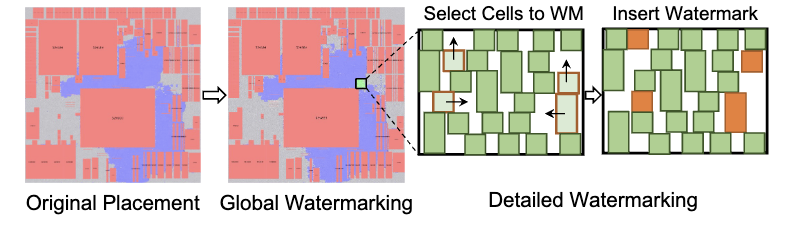

ICMarks (TCAD'25) proposed the first quality-preserving and robust watermarking framework for modern physical design to safeguard IC layout IP.